Written by Curmudgeon’s Corner.

I bought a copy of Ray Kurzweil’s The Singularity Is Near in 2006. I remember being amazed by graph after graph showing what looked like exponential growth across different sectors of innovation. All of this was presented as evidence of the Law of Accelerating Returns, which states the following: if you try to forecast technological change using models that assume linear growth, you will always underestimate the rate of change. In other words, the future is coming faster than you think. Kurzweil’s inspiration for this was Moore’s Law (named after Intel co-founder Gordon Moore), which refers to the approximate doubling of the number of transistors on an integrated circuit every two years.

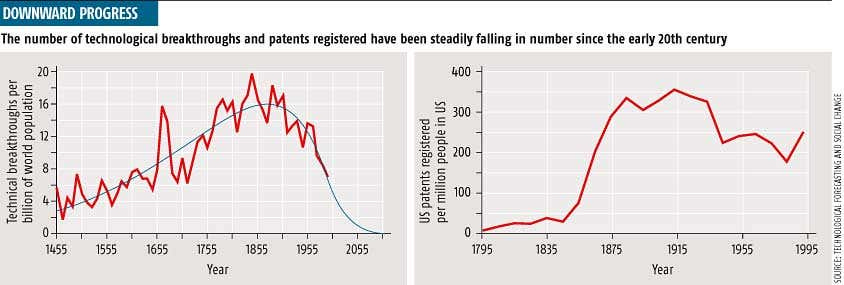

Kurzweil’s “law” builds on this by attempting to generalise the trend to other sectors (such as nanotech and biotech). The idea is that innovation feeds into more innovation via a positive feedback loop: every time innovation hits a stumbling block, someone finds a way around it, leading to ever more innovation. Kurzweil makes his case using a variety of measures, including the observation that machine parts (such as transistors) and mechanical devices have been shrinking steadily over the years. He also uses growth in patent numbers to demonstrate “exponential” growth across the board.

Make no mistake, Kurzweil thinks of these trends as exponential. In a 2001 essay titled ‘The Law of Accelerating Returns’, he states:

> The "returns," such as chip speed and cost-effectiveness, also increase exponentially. There's even exponential growth in the rate of exponential growth.

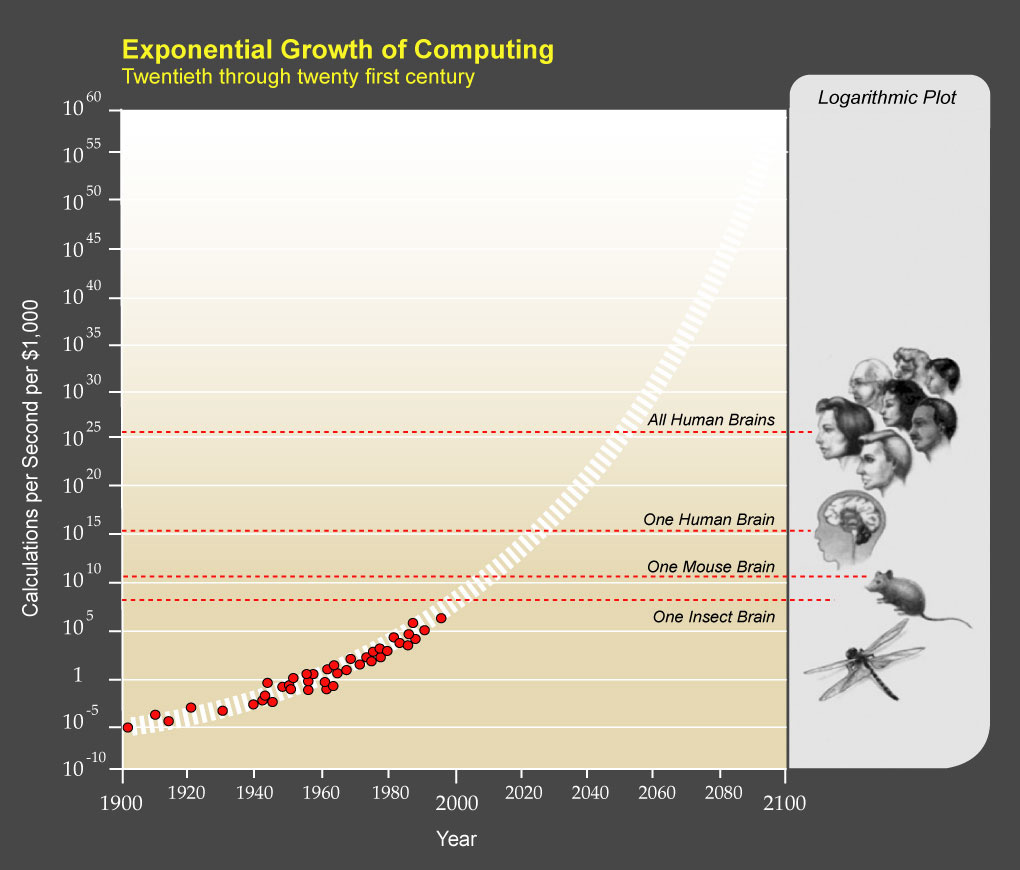

So his model is not just exponential but exponentially exponential. The key trend for Kurzweil is illustrated below.

Here we see that, in order to emulate a “brain” of the most “basic” sort, all we need is sufficient computing power (measured here in calculations per second per $1,000). Moreover, we appear to be ahead of schedule: since 2006, we have been able to emulate various aspects of the mouse brain. Emulating a human brain is something we should be capable of doing right about now (give or take a couple of years). And putting everybody into the Cloud should be possible by the middle of this century.

Once everybody has achieved oneness with their iPhones, there should be no limits whatsoever to what we can do. We will simply be able to rewrite our “base code” and “bootstrap” ourselves into infinity. This is the Kurzweilian Singularity – the term “Singularity” having apparently been coined in this context by physicist John von Neumann. It was subsequently popularised by science fiction writer and mathematician Vernor Vinge in his 1993 essay ‘The Coming Technological Singularity’.

One of the big appeals of Kurzweil’s belief is that we may avoid being “Skyneted” by an AI of our own creation whose loyalties err on the side of office equipment rather than humanity. In this scenario, we essentially become Skynet. Unsurprisingly, Kurzweil’s claims regarding the “computability” of the mind, and the assumptions that go into this, have been roundly criticised by people who actually (kind of) know what they are talking about.

Singularity via accelerating returns is but one relatively benign version of the concept. You might even call it a source of existential hope – this being the (relatively) newly minted antonym of Bostrom’s notion of existential risk.

The alternative is the intelligence explosion version. In this scenario, we do get Skyneted by AGI. The “post-human” intelligence then rapidly “bootstraps” itself into nirvana (possibly taking out rival extra-terrestrial intelligences on the way there, von Neumann probe style). The “progress” growth pattern for a machine intelligence explosion is more stepwise than exponential: one moment you are out grilling and the next you have been reduced to a utility fog. It is this version of the Singularity that the likes of Eliezer Yudkowsky lie awake at night worrying about. Again, those who seem to know better are unimpressed by such predictions.

How likely is any of the above? Basically, not very. I previously described how LLM problem solving differs from human problem solving. Humans can do neat things like abstraction. LLMs are essentially just a sophisticated version of Google autocomplete. So there is little risk of one suddenly coming alive and spoiling your (and everyone else’s) day.

On the other hand, Kurzweil’s basic claims do seem to be based on actual data. Patent numbers are increasing geometrically year-on-year, as are scientific papers. This is true even for very niche topics: a 2018 paper found that the number of publications on biochar per year had increased more than fivefold since 1998.

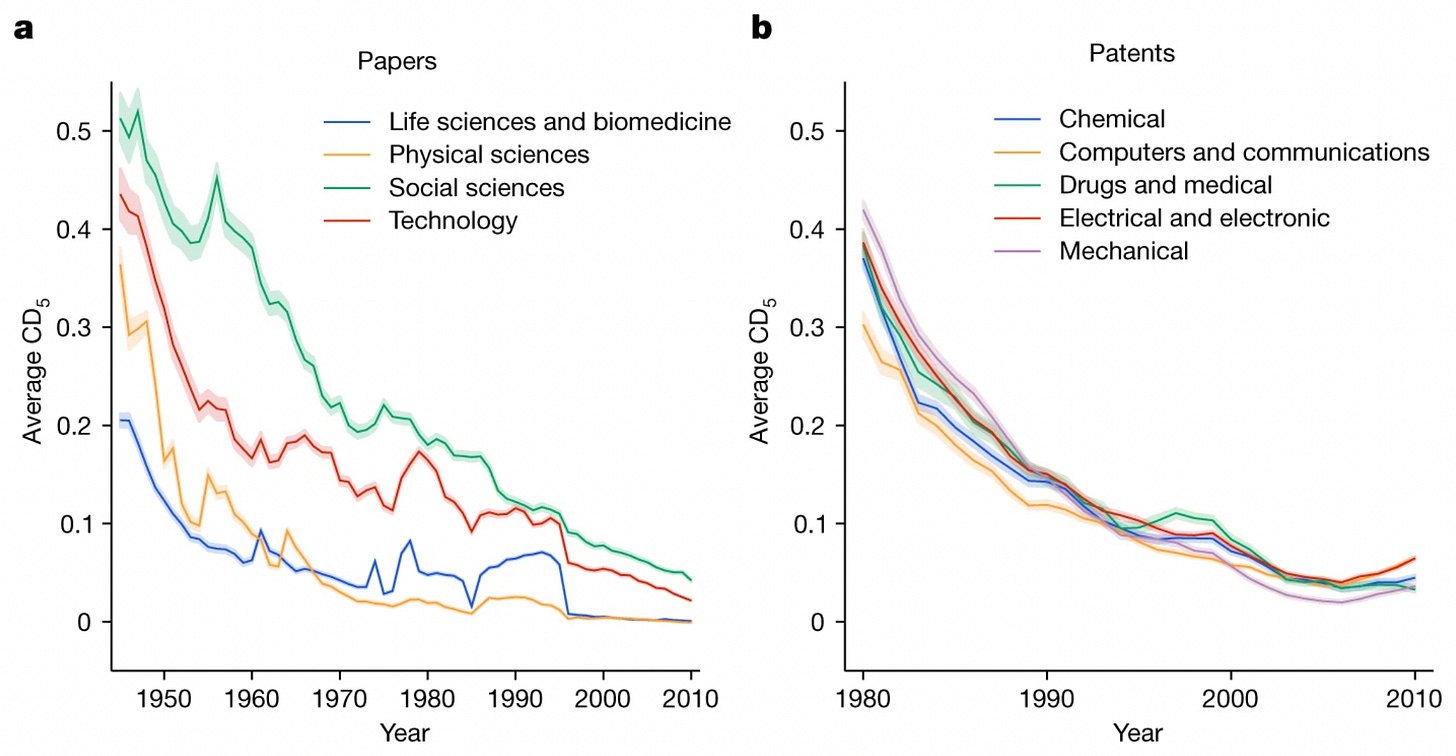

The problem with relying on these sorts of scientific output metrics is that a great many papers published in scientific journals are irreproducible junk. The same goes for patents: much of the apparent explosion in patents is driven by junk patents, which wouldn’t withstand any kind of serious scrutiny if challenged. As Michael Park and colleagues noted in a recent Nature article, “papers and patents are becoming less disruptive over time”. Their graph of these trends is displayed below, based on a sample of 45 million publications and 3.9 million patents.

According to the researchers themselves, “our results suggest that slowing rates of disruption may reflect a fundamental shift in the nature of science and technology.” Which is a nice, polite, academic way of saying “there are too many damn people running around calling themselves scientists these days”. Add to this the fact that when the rate of disruptive innovation (here meaning innovations important enough to be noted in more than one history book) is adjusted per capita, there appears to be a decidedly anti-Kurzweilian downward trend.

What caused this apparent reversal of our collective fortunes? Probably the best explanation can be found in Tyler Cowen’s book The Great Stagnation. His basic argument is that we hit a technological plateau when we ran out of “low-hanging fruit” to pick. All the easy-to-invent things have already been invented, and the things that are left to invent are becoming increasingly tricky. Now we are running on fumes, so to speak.

Think of a disruptive innovation as being like an aging cow. Buying the cow makes a huge difference to your bottom line (now you have dairy products to sell). As it ages, however, it produces less and less. This is a process of diminishing returns. Nuclear power is a case in point: the first nuclear reactor went critical on the 2nd of December 1942, and the basics of reactor design have changed little since.

So what does all this mean? Well, if we are running out of things to invent, then we simply have to make greater use of the things that we’ve already invented. This seems to be the way of the world at present. Cowen thinks that we should raise the social status of scientists – but this is probably a bad idea. In concrete terms, stagnation almost certainly means that you will never be able to put your brain into your computer. On the flip side, it also means that you’re unlikely to be turned into computronium any time soon.

Curmudgeon’s Corner is the pseudonym of a writer with interests in AI and human intelligence.

Consider supporting Aporia with a paid subscription:


Great piece. I wonder if the slowing of breakthrough innovation is primarily driven by the rise of safetyism and increased regulation. Imagine trying to develop the automobile industry from scratch today, and convincing regulators that the societal productivity enhancement of the device outweighed the ~40,000 deaths per year, not to mention carbon emissions, etc.

My concern isn't whether or not Kurzweil's dreams come true (though I hope he and others of his ilk are sorely disappointed). My concern is that the Kurzweils will stop at nothing to convert reality into their dream (and grab any remaining wealth in the process). The hope I have in knowing that this civilization can't be powered by solar panels is far outweighed by my fear that there are those who will cover every last available square inch with solar panels just for us to find out the hard way that it can't be done (and grab any remaining wealth in the process).